TVP WEEKLY: How does AI differ from a typical computer program?

MAREK GAJDA: Every program consists of two elements: code that describes what to do, and the database it uses. The biggest difference is that in the case of AI, the program contains relatively little information about what to do, but its database is gigantic. This is called the “training set”. It is up to us to teach the AI to perform specific tasks. A typical computer program, by contrast, is handed everything on a plate in its code: both detailed instructions on what to do and all the data needed to do it.

Let me explain this using the example of chess. With the classic approach, we would describe, in thousands of lines of code, all the procedures of a correct game: how the pawns and pieces move, which moves are allowed and which are forbidden, etc. On top of that come commands for specific situations, such as: before making a move, make sure that your pawn or piece is protected; and if there are many possible moves, we add algorithms to choose the “lesser evil” or the “greater good”. The code would provide specific instructions on how to win a chess game.
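To make the classic approach concrete, here is a toy Python sketch of two of the hand-written rules described above: prefer moves after which the moved piece stays protected, and among the remaining options pick the “greater good” (or the “lesser evil”). The `Candidate` type and its fields `piece_protected_after` and `material_gain` are hypothetical simplifications; a real rule-based engine would have to compute them from the board and would need thousands of further rules.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    """One legal move under consideration (hypothetical, simplified)."""
    move: str
    piece_protected_after: bool  # would the moved piece still be defended?
    material_gain: int           # positive = "greater good", negative = "lesser evil"


def choose_move_by_rules(candidates):
    """Apply two explicit, programmer-written rules to pick a move."""
    # Rule 1: keep only moves after which the moved piece stays protected,
    # unless no such move exists.
    safe = [c for c in candidates if c.piece_protected_after]
    pool = safe if safe else candidates
    # Rule 2: among what remains, take the biggest gain (or the smallest loss).
    return max(pool, key=lambda c: c.material_gain).move


moves = [
    Candidate("Qxb7", piece_protected_after=False, material_gain=1),
    Candidate("Nf3", piece_protected_after=True, material_gain=0),
    Candidate("Bc4", piece_protected_after=True, material_gain=-1),
]
print(choose_move_by_rules(moves))  # Nf3
```

Every decision here was spelled out by the programmer in advance; the program has learned nothing from any game.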

Creating an AI for chess, on the other hand, would primarily consist of preparing a set of all the games played at important chess tournaments (available on the Internet), without indicating exactly what the program should do in a given situation. It would be more like saying: watch these games carefully, then play and win yourself. That’s your goal.
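For contrast, the sketch below learns from recorded games instead of encoded rules. It is a deliberately naive stand-in, not how real chess AIs work: it simply counts which move the eventual winner played in each position and later repeats the most frequent one. The positions are hypothetical opaque labels rather than real board states, and `learn_from_games` and `choose_move` are illustrative names.

```python
from collections import Counter, defaultdict


def learn_from_games(games):
    """Count how often the eventual winner chose each move in each position.

    `games` is a list of (moves, winner) pairs, where `moves` lists
    (position, move) pairs in play order, starting with White.
    No rules of chess are written anywhere in this code.
    """
    stats = defaultdict(Counter)
    for moves, winner in games:
        for i, (position, move) in enumerate(moves):
            mover = "white" if i % 2 == 0 else "black"
            if mover == winner:  # only imitate the side that went on to win
                stats[position][move] += 1
    return stats


def choose_move(stats, position):
    """Return the move winners played most often here, or None if unseen."""
    if position not in stats:
        return None
    return stats[position].most_common(1)[0][0]


# Hypothetical recorded games; positions are labels, not real board states.
games = [
    ([("start", "e4"), ("after e4", "e5")], "white"),
    ([("start", "e4"), ("after e4", "c5")], "black"),
    ([("start", "e4"), ("after e4", "e5")], "white"),
    ([("start", "d4"), ("after d4", "d5")], "white"),
]
stats = learn_from_games(games)
print(choose_move(stats, "start"))     # e4
print(choose_move(stats, "after e4"))  # c5
```

The program is never told that e4 is a good opening; it only copies what winners did, which is also why it has nothing to say about a position it has never seen.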

It still seems like magic. Does that mean AI is capable of coding itself? And is it possible to extract from it a record of what it did with the data set, and how?

Not exactly. It is not really possible to look under the bonnet, especially in the case of the new deep learning systems, where programs are fed data broken down to the finest detail and stripped of its context. It is up to the machine to decide how to organize that data and what weight to attach to each part of it. And the amount of data is so huge that it is impossible for a human to understand precisely what the algorithm is doing.

Thus, AI can analyze data and draw conclusions from it. This leads to the creation of a permanent code that tries to work like the human brain, that is, a neural network. Take, for example, two popular apps on our smartphones: one for recognizing car brands, the other for recognizing plant species. They both work the same way: we take a picture of an object, and the algorithm tells us what it is. Both are based on AI and are exactly the same underneath: a neural network that is supposed to learn to “recognize something”.
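The point that both apps are “exactly the same” can be illustrated with the simplest building block of a neural network, a single artificial neuron (perceptron). The same training code is run twice on different hypothetical data and produces two different recognizers. The two-number feature vectors stand in for what a real app would extract from a photo; actual recognition apps use deep networks working on image pixels, so treat this only as a sketch of the principle.

```python
def train_perceptron(samples, labels, epochs=20, learning_rate=0.1):
    """Train one artificial neuron to separate examples labelled 1 from those labelled 0."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for features, label in zip(samples, labels):
            error = label - predict((weights, bias), features)
            # Nudge each weight in the direction that reduces the error.
            weights = [w + learning_rate * error * x for w, x in zip(weights, features)]
            bias += learning_rate * error
    return weights, bias


def predict(model, features):
    """Fire (return 1) if the weighted sum of the features exceeds zero."""
    weights, bias = model
    activation = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 if activation > 0 else 0


# Hypothetical features scaled to 0..1.
plant_samples = [[1.0, 0.2], [0.9, 0.1], [0.2, 0.9], [0.1, 1.0]]
plant_labels = [1, 1, 0, 0]  # 1 = "species A", 0 = "species B"
car_samples = [[0.3, 0.9], [0.2, 1.0], [0.9, 0.3], [1.0, 0.1]]
car_labels = [1, 1, 0, 0]    # 1 = "brand X", 0 = "brand Y"

plant_model = train_perceptron(plant_samples, plant_labels)
car_model = train_perceptron(car_samples, car_labels)

print(predict(plant_model, [0.8, 0.3]))  # 1
print(predict(car_model, [0.8, 0.3]))    # 0
```

The learning rule never mentions plants or cars; what each model recognizes depends entirely on the training set it was given.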

Can the user influence how they work? For example, if I am a botanist and spot an error in the app’s classification of a plant, can I correct it for the benefit of other users?

Some programs allow us to correct the answers given by the machine. The program then adds the corrected answer to its “training set” as a new piece of information. However, there is not much programming work to be done in AI. There are, of course, engineers who develop AI: the bright guys who design the underlying mechanism, the “system brain model”. Once it has been created, the trick is to teach it what we want, and to teach it well, that is, to give it good pictures of plants, with good descriptions, special cases, etc. After all, a flower may look different depending on the time of day or the conditions in which the photo, the application’s input, was taken. This requires thorough knowledge, which is why specialist databases are created in cooperation with experts in a given field.
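The correction loop described above can be sketched as follows, assuming a 1-nearest-neighbour classifier in place of a neural network because it makes the effect of one new example immediately visible: an expert’s correction is appended to the training set and changes later answers. The class `CorrectableClassifier`, the feature tuples and the plant labels are all hypothetical.

```python
import math


class CorrectableClassifier:
    """Hypothetical 1-nearest-neighbour classifier whose training set grows with corrections."""

    def __init__(self, training_set):
        # training_set: list of (feature_tuple, label) pairs
        self.training_set = list(training_set)

    def classify(self, features):
        # Answer with the label of the most similar known example.
        nearest = min(self.training_set, key=lambda item: math.dist(item[0], features))
        return nearest[1]

    def correct(self, features, right_label):
        # An expert's correction becomes one more labelled example.
        self.training_set.append((features, right_label))


app = CorrectableClassifier([((1.0, 1.0), "daisy"), ((5.0, 5.0), "rose")])
photo = (2.0, 2.0)
print(app.classify(photo))        # daisy (the app's mistake)
app.correct(photo, "chamomile")   # a botanist fixes it
print(app.classify((2.1, 2.0)))   # chamomile
```

Later users photographing a similar plant now get the corrected answer, which is why the quality of the corrections, and of the experts providing them, matters so much.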

A few weeks ago, Blake Lemoine, a developer working on LaMDA, Google’s “superintelligent chatbot”, claimed that his AI had “become conscious”. Let’s put aside the question of what consciousness means, because each science defines it differently. Instead, let’s focus on the technical side: what do you think happened there?

Artificial intelligence works in three areas. The first, most basic and oldest, is so-called business intelligence. It is used in large enterprises whose departments, such as accounting, HR, marketing and sales, generate huge databases. Managers expect that once these databases are uploaded into the AI, the system will spot correlations that are undetectable to the naked eye. The input is raw data; the output is the result of an analysis. In business intelligence, the analysis is done by simpler mechanisms, called machine learning, which process data mechanically and do not approach it creatively.
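As a rough illustration of spotting correlations “undetectable to the naked eye”, the sketch below scans every pair of columns in a small table and reports the pairs whose Pearson correlation coefficient reaches a threshold. This is a plain statistical calculation rather than a machine-learning model, and the column names, figures and the 0.8 threshold are invented for the example.

```python
from itertools import combinations
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equally long numeric sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)


def strong_correlations(table, threshold=0.8):
    """Return (column_a, column_b, r) for every column pair with |r| >= threshold."""
    found = []
    for a, b in combinations(table, 2):
        r = pearson(table[a], table[b])
        if abs(r) >= threshold:
            found.append((a, b, round(r, 3)))
    return found


# Invented monthly figures from three departments.
monthly = {
    "ad_spend": [10, 20, 30, 40, 50],
    "sales": [105, 198, 310, 395, 502],
    "staff_absences": [3, 1, 4, 1, 5],
}
print(strong_correlations(monthly))  # [('ad_spend', 'sales', 0.999)]
```

The scan is purely mechanical: it checks every pair without any idea of what the columns mean, and a strong correlation only marks a pattern worth a closer look, not a cause.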

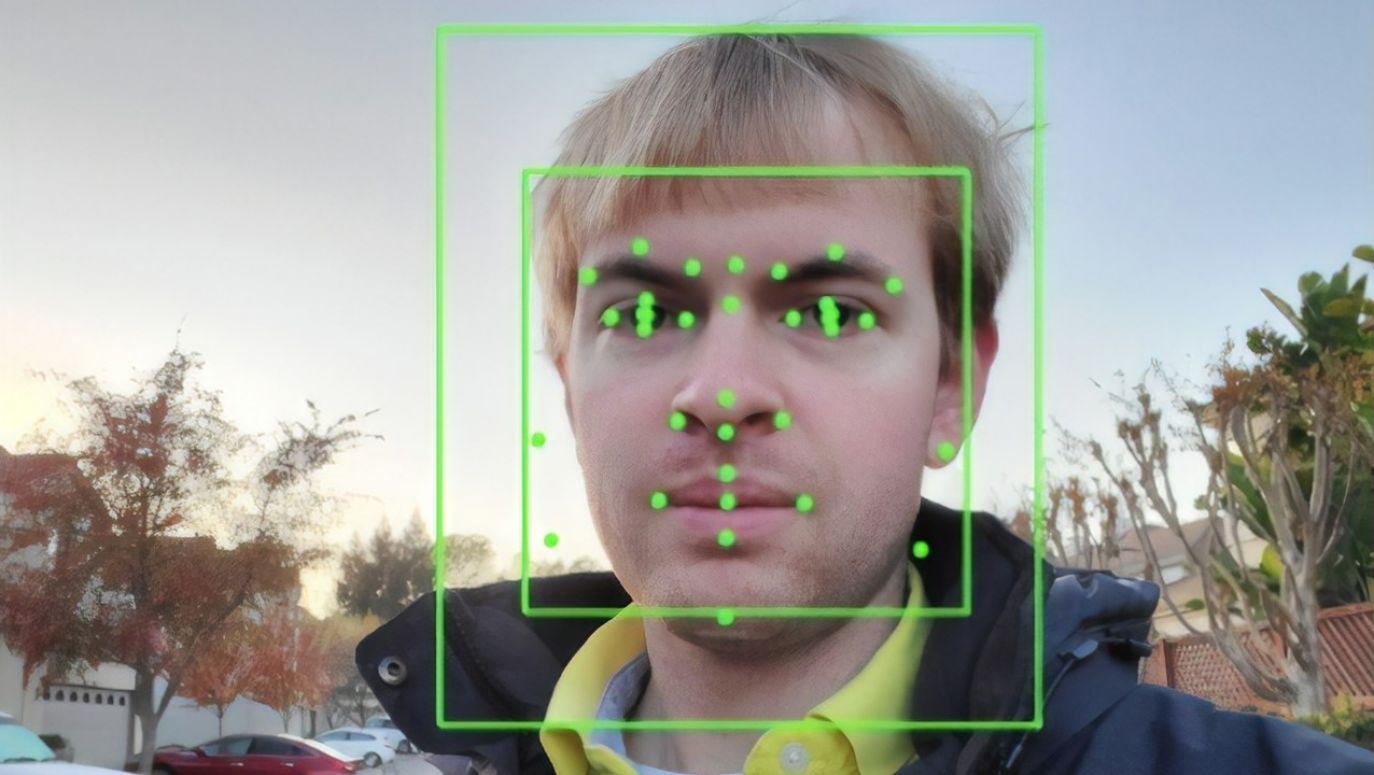

The second area of AI activity is the already mentioned recognition of things. Its uses range from gadgets to serious civilian applications (such as cancer diagnostics based on medical imaging, or identification of people based on biometric data) and military ones (e.g. recognition of military objects in satellite images). In this area, AI helps people do what they can already do themselves, but unlike them it does not get tired and, we assume, is not guided by prejudice.
