What really makes many people’s hair stand on end in this matter is the prospect of controlling a mind while it is being read, without the person knowing it, a vision brilliantly captured by Stanisław Lem in “Solaris”. The very idea of creating social subordination with tools far more modern than the mass media was giving many people sleepless nights even before the latest thought decoder arrived from Texas. Today it could be as in the unforgettable joke about Wiesław Gomułka’s speeches: “We stood at the edge of a precipice, but we have taken a big step forward”.

The authors of the publication in Nature Neuroscience themselves assure readers that “as brain-computer interfaces should respect mental privacy, we tested whether successful decoding requires subject cooperation and found that subject cooperation is required both to train and to apply the decoder”. This means that their machine cannot be used, for example, to monitor “thoughtcrime”.

Similarly, in the abovementioned opinion, Jerry Tang expresses his understanding of these fears and asserts: “While our brains give rise to our mental processes, we have a limited understanding of how most mental processes are actually encoded in brain activity. As a result, brain decoders cannot simply read out the contents of a person’s mind. Instead, they learn to make predictions about mental content. A brain decoder is like a dictionary between patterns of brain activity and descriptions of mental content. The dictionary is built by measuring how a person’s brain responds to stimuli like words or images”.

What if fMRI imaging, which depends on a large and expensive machine reserved for medical needs and therefore hard to access, could be replaced with something more practical and handy, such as functional near-infrared spectroscopy (fNIRS)? It measures essentially the same thing as fMRI, i.e. the activity of specific areas of the brain, but at a lower resolution. It may not be available to millions, but it will be available to thousands! Why not?

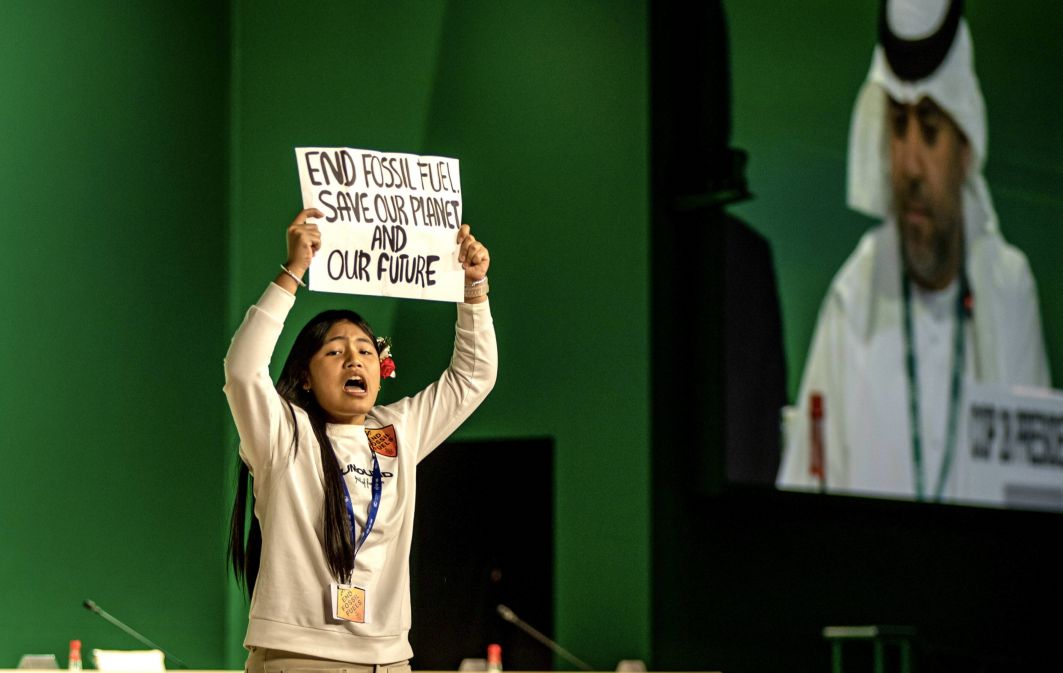

“We want to make sure people only use these types of technologies when they want to and that it helps them”, Jerry Tang told the press service of his university, which is now sending his words out to the world. It is a world in which, on the one hand, millions of people have been deprived of the ability to speak and communicate by strokes and other disorders and in which, on the other hand, state and corporate surveillance has reached a hitherto unknown extent.

Jerry Tang and his boss Alexander Huth have filed a patent application for their invention. They themselves therefore judge that the technology has a future. As is often the case with scientific breakthroughs, the point is that the psychologists, lawyers and politicians responsible for legislation should not wake up only once the milk has already been spilt. The question of what these devices may be used for, and how to protect people’s privacy, must be regulated immediately.

– Magdalena Kawalec-Segond

– Dominik Szczęsny-Kostanecki

TVP WEEKLY. Editorial team and journalists

Sources:

https://www.nature.com/articles/s41593-023-01304-9

https://www.statnews.com/2023/06/08/brain-decoders-mind-reading-research-ethics-privacy/

https://medicalxpress.com/news/2023-05-brain-decoder-reveal-stories-people.html


Already in 2019, i.e. four years ago (which the Cambridge genius didn’t live to see), a new way of extracting a person’s speech directly from their brain was developed. A person’s intention to speak certain words can be picked up from brain signals and converted into text quickly enough to keep up with natural conversation. We owe this discovery to a group led by Edward Chang at the University of California, San Francisco. The device, which is able to decode a small set of listed words, works on the basis of a dense encephalographic reading. It was supposed to replace the gadgets used by Hawking. However, the pandemic-era breakthrough in the field of AI is now putting those innovations out to pasture.
